Find the duplicated number in an Array.

Find the duplicate numbers in an Array

Today I was asked an interesting question during an interview. The problem is that find the duplicated number in an array where the array consists of numbers from 1 to n plus a duplicated number.

One example, given [1,2,3,2], the algorithm should return 2.

Solution 1 Hashmap:

It is pretty easy just to use a hashmap to store the frequency for each number and return the number with frequency as 2.

With python it is just one line:

The time complexity and space complexity are both O(n).

Solution 2 Sorting:

If we want to optimize the space complexity, we can sort the array first, and iterate the array and return the element which is the same as its predecessor. The code looks like this:

The time complexity is O(N log N) and space complexity is O(1)

Solution 3 Cycle sort:

Cycle sort is a very efficient algorithm if the element of the input array is known before. The idea is to put number i into the position ith + 1 in the array, so we can sort the array with O(n) time complexity.

To make it easy to understand, we can allocate a new array with the same size as the input array, and move each element of the input array to its correct position in the new array. For example, 1 should go to index 0, 2 should go to index 1, etc..

The code looks like this:

Based on the idea, we can further improve the space complexity by doing in-place cycle sort, if we find a number is already in place, we do nothing,

otherwise, we put them to the correct place by swapping. If the desired place already has a correct number, this means we find a duplicated number.

The final solution gives us O(n) time complexity and O(1) space complexity. If you know a better solution, feel free to leave a comment.

Labels: Algorithm

Tuesday, December 22, 2020

2020 recap

2020 is hard for everyone but maybe this is the best year for the next 10 years.

Some fun facts about my 2020:

- Biggest achievement: Finally get my German driver's license.

- Pity: Got an offer from Facebook but could not take due to family reason.

- The best game: Red Redemption 2.

- The most enjoyable work: Designed a message-like Java library and got praised by team members.

- The best movie: None

- The best TV series: The Queen's Gambit

- Gained 5 Kg weight.

- Last but not least, not be affected by Covid-19 so far. 😁

Tuesday, August 21, 2018

API rate limiting use Zuul and Redis

Yes, this is just another blog about API rate limiting. There are already tons of articles about this topic all over the Internet [1] [2], so this blog is not about the benefit and algorithm of API rate limiting but some practical problems when we implement API rate limiting in our API Gateway platform.

Problems

- Various rate limiting criteria.

Besides the normal "my endpoint can only be accessed X times in Y minutes/hours" situation, we need the rule of API rate limiting to be more flexible and configurable. For example, you can protect your API with a certain threshold, however, if there is an attacker who brutes force this API, this threshold will be easily reached and the “good” users will be blocked

Sometimes we need rate limit API based on client IP and sometimes we need based on user ID or user token.

- Be agile when facing incidents.

Incidents and outrage are normal. Instead of trying to eliminate them we should be agile when they happen. We need to change the rate limiting rule in real time, restart the application to load some new value from an external place is not an option.- Non-invasive integration with the service.

So for every API needs to be protected by rate limiting, you need to duplicate some code and release a new version. As a self-managed platform, we want to provide an easier way for engineers.

Solutions

After rounds of discussion, we came up a configuration like this

It is very straightforward except for the "keys" field. The field is used to generate a key for each request matched by the "pattern", in the example above, the "keys" only contains an "IP", then the for each unique IP, the thresholds are 50 counts in every minute and 500 counts in every hour.

Other details:

- We use the RateLimitJ as our rate limiting SDK which provides both Redis and memory based repository.

- We extend the Netflix Archaius, we store the configuration as a JSON string in Dynamodb and in the application we use the JSON string as a dynamic object. Every time the Dynamodb has a new value, the dynamic object will refresh automatically.

- A new custom Zuul filter is added, when a request is limited, Zuul will return it immediately without routing.

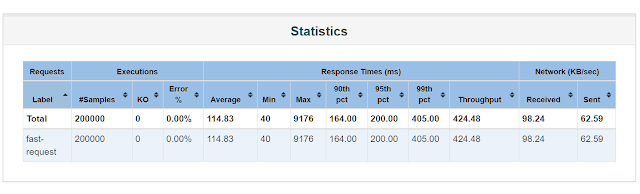

Performance

We did some stress tests about API rate limiting, the environment set is:

- Zuul: EC2 c5.2xlarge, with 10GB JVM, G1 garbage collector

- Redis: cache.r4.large

- Downstream service: a cluster with 10 instance

Jmeter set up: 50 threads, each thread makes 4000 requests.

Here are the results of 3 round tests:

|

Fig. 3. With rate limiting of 100000 requests/hour

|

- The Redis based rate limiting will introduce 3 to 5 milliseconds latency, which is also verified by our debug log.

- After the API is rate limited, Zuul will return "TOO_MANY_REQUESTS" without routing request to downstream service. In production, this will reduce the average response time because the p50 response time is around 300 milliseconds.

Tuesday, June 12, 2018

Using HTTP Traffic Stream and Kinesis Analytics to identify abnormality.

This blog is from a project I am working recently. In order to protect some sensitive data, some content will be changed, however, the case is a real case in our production.

Background and motivation

The internet is a dangerous place, for our company, some malicious users try to call an API by a script for some benefits. The company lost money on this and the system waste resource on these requests.

Usually, the solution is based on analyzing the ELB/ALB access log, however, the format of the ELB/ALB access log is as follows.

timestamp elb client:port backend:port request_processing_time backend_processing_time response_processing_time elb_status_code backend_status_code received_bytes sent_bytes "request" "user_agent" ssl_cipher ssl_protocolAs the format says, the information about the client for each request is very general. Based on the information provided by the access log, people usually end up blocking IP or CIDR addresses. Blocking IP is problematic since the attacker could change the IP easily and an IP could be shared one.

Besides, the latency is unpredictable, at first, the ELB/ALB needs to push the log to S3 after that you need to step a Lambda function to retrieve the S3 file. However, your Lambda function will only be triggered after the file is created, it is not real-time.

Switch to an information-rich data source

As the previous blog says, I am working on the API Gateway service for the whole company these days. One purpose of this project is to stream all HTTP Stream to one place so other engineers could build their services based on this stream.

For each HTTP request, we generate an event and send it to Kinesis.

This is the JSON format for one HTTP request and the corresponding response.

As the JSON object shows, the information is much rich than the ELB/ALB access log, it has everything about an HTTP request and we can add more fields to this object such as response time, etc..Analyzing the data using Kinesis Analytics

After streaming all the data we need, we can use Kinesis Analytics to generate the output we need.Let's assume there are some attackers try to brute force the API http://login.company.com/login and the authorization is unique for each user. On this API we put a constraint of 5 times per minute for each user. So we can easily write a simple SQL in Kinesis Analytics application:

The BRUTE_FORCING_DETECTION_STREAM is the output stream and we can connect it to a Lambda function, the function looks like this:

This Lambda function will be executed in every one second, and once there is an event in the BRUTE_FORCING_DETECTION_STREAM, the Lambda will receive the authorization header and call API Gateway Service to block this authorization header. The data flow likes this:

The latency

The key to identifying this kind of malicious user is the latency. If it takes 10 minutes to block a user, he may already finish his job. For testing, I wrote a simple program to access this API with fix interval.

After a while, the program stopped and this was the outputWrong:{"errorCode":"ZUUL-EDGE-0001","operationMessage":"Your request has been throttled.","message":"Your request has been throttled.","fields":null}, execute 11 requests.

Executing 112 second, 11 requestsSo, this "attacker" succeeded 11 times before being blocked for 2 minutes. Our API Gateway service pulls configuration every one minute, so actually, it only took the Kinesis Analytics 1 minute to spot this "attacker" which was exactly the length of our sliding window.

The reusable stream data

As stated before, we don't want to solve problems case by case, we want to deliver something general enough to be reused in the system. The HTTP traffic stream is an example. This is just one use case and based on the data and the other AWS services the potential is huge. For example, this is an article to show how to build real-time hotspot detection for a TAXI-hailing company using the Kinesis Stream machine learning algorithm.

Conclusion

In the blog, we show that with some in-house solutions combined with some AWS service, we are able to build a real-time system for monitoring our HTTP traffic for multiple use cases.

Tuesday, May 15, 2018

Service isolation in Zuul

These days I am working on a company-wide API gateway. The idea is to hide all microservices behind this gateway. We build this gateway based on Netflix Zuul. When building this kind of company-wise infrastructure a typical question is how to isolate the resource between multiple users. In the field of database or virtualization, it is called multi-tenant.

Why do we need service isolation in Zuul.

This is a GitHub issue about Zuul:

Q:

We are planning using Zuul in production as an API gateway. I am not sure the behavior of Zuul in this specific scenario.

Q:

We are planning using Zuul in production as an API gateway. I am not sure the behavior of Zuul in this specific scenario.

Suppose there are two back-end services A and B sitting behind Zuul. Service A is a slow one but with a lot of traffic. What will happen to the clients visiting Service B through Zuul?

On the clients' side, Service B will be unavailable because Service A slow down the Zuul so there is no resource to handle the request for Service B. I'm not sure about this, and any advice or experience will be much appreciated.

A:

At the end of the day, yes if Zuul runs out of threads to handle incoming requests because a service downstream is behaving badly, yes you wont be able to proxy any other requests to other services. However that is what we integrate Hystrix with Zuul so that you can protect Zuul from a situation like this. You could also do things like adjust timeout values to make sure that slow requests timeout after an appropriate amount of time.

In a nutshell, we need to reduce the blast radius and optimize our resource usage since the API gateway is a critical path of the whole system.

Failure isolation

According to the response of the community, if we are using the Ribbon-based routing filter in Zuul, we can easily isolate any failing microservice from other services.

Fig 1. Failure isolation when service B is dead

When every HTTP request is wrapped by a Ribbon command (it is Hystrix command under the hood) and a microservice is dead, instead of time out every request, the Hystrix will open the circuit for this microservice and keep the circuit open until this microservice is alive again.

Resource isolation

According to the response of the Spring community, once every HTTP request is wrapped by a Hystrix command and with proper Hystrix command key set up, we can isolate failures between microservices. But Hystrix can not help to isolate resources between each microservice.

What does resource mean in our context?

Every system has limited resources. In our case, our Zuul is a web application running in a web container. Most of the web containers like Tomcat, Jetty, Undertow, they maintain a worker thread pool to handle HTTP requests. During the life cycle of an HTTP request, a worker thread is dedicated to this HTTP request. We treat the worker thread pool as our main resource in Zuul, and we want to distribute this resource among multiple microservices based on some algorithm and configuration.

From Zuul's perspective, it doesn't differentiate HTTP requests between microservices and we don't want to end up with an overcomplicated customized thread pool. We use the JDK semaphore to isolate the resource usage between each microservice.

The idea is that suppose we have N threads in the worker thread pool, and there are 3 microservices behind Zuul. In order to share these N threads evenly between these 3 services, we set up 3 semaphores each with the number of N/3. Every HTTP request for each microservice needs to acquire the corresponding semaphore before being routed. If there are too many requests for one microservice, the Zuul will simply return HTTP 490(Too Many Requests) without actually routing the request.

Fig 2. Resource isolation when service B is overflowed

In order to fully use the hardware resource, we also introduce another factor called the over-sell-factor, so the sum of numbers of all semaphores will equal over-sell-factor × thread-number.

Test

In order to validate the service isolation, I did a simple test in our QA environment. I set up 2 microservices and one Zuul instance with 16 worker threads. I used the Apache AB to run the load test, the commands are:

- ab -n 10000 -c 20 http://service-a.elasticbeanstalk.com/info

- ab -n 10000 -c 9 http://service-b.elasticbeanstalk.com/info

Both service-a and service-b are CNAME points to Zuul.

So for service A, the currency is 20 and for service, B is 9. We run the commands at the same time and here is the result. From the output of Apache AB, some requests for service A failed because of the isolation while all the requests for service B succeeded.

Fig 3. Result with service isolation on

Work in the future

Currently, we have two strategies to distribute resources, one is the fair and the other one is weighted one. But ideally, this kind of distribution should adjust dynamically according to the current status and load of the System.

About me

I am Sicheng, I started working as a back-end engineer in Berlin 8 months ago. Before that, I was a senior engineer of the middle-ware technology team in Alibaba in China.